Table of Contents

- Introduction

- Overview of Azure Blob Storage Account Operational Backup and Storage Management Policy

- Azure Storage Account Management Policy

- Azure Blob Storage Account Operational Backup

- Prerequisites

- Creating a Terraform Module for Azure Blob Storage Backup

- Implementing Multiple Rules with for_each

- Outputs

- Conclusion

- Useful Links

Introduction

In this blog post, I continue our journey into managing Azure resources using Terraform. As a follow-up to our previous posts (Part 1, Part 2) on Azure Recovery Services Vault, we'll now explore how to leverage data lifecycle management using the azurerm_storage_management_policy Terraform resource.

It is worth mentioning that Azure Blob Storage Account Operational Backup and Azure Storage Account Management Policy are two different Azure features. They serve different purposes and can be used together for comprehensive data protection and management of Azure Blob Storage.

Azure Blob Storage Account Operational Backup provides continuous, point-in-time protection for Azure Blob Storage. You set a retention period in a backup policy, and within that window you can restore your data to an earlier state after accidental deletion, corruption, or other data loss scenarios. Operational backup is a local backup: the protected data stays in the source storage account rather than being copied to a separate one.

On the other hand, Azure Storage Account Management Policy, which Terraform exposes as the azurerm_storage_management_policy resource, lets you define lifecycle rules for the blobs in a storage account. With it, you can specify rules for data retention, tiering, and deletion, which helps you meet retention requirements and automate data management tasks. Each storage account has its own policy, and defining that policy in Terraform lets you apply the same rules to many storage accounts, which provides consistency and scalability across your organization.

Using Azure Blob Storage Account Operational Backup and Azure Storage Account Management Policy together can provide a comprehensive data protection and management solution for your Azure Blob Storage environment. You can use operational backups for data recovery and retention, and storage management policies for enforcing data management rules and automating tasks. This can help you ensure that your data is protected and managed efficiently, while reducing the risk of data loss and non-compliance.

Let's break down the differences between the two:

Overview of Azure Blob Storage Account Operational Backup and Storage Management Policy

Azure Storage Account Management Policy

Azure Storage Account Management Policy defines data lifecycle rules for Azure Blob Storage. In Terraform, it is managed through the azurerm_storage_management_policy resource of the AzureRM provider. These policies help you automate the transition of data between different storage tiers (Hot, Cool, and Archive) and manage the deletion of expired data based on your business needs.

Key features of Azure Storage Account Management Policy include:

- Defining rules for transitioning data between different storage tiers (Hot, Cool, and Archive) based on age and access patterns

- Configuring the deletion of expired or older data, freeing up storage space and reducing costs

- Managing data lifecycle policies using Terraform, a popular Infrastructure as Code (IaC) tool
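To make these features concrete, here is a minimal sketch of a single lifecycle rule written with literal values. The storage account name, resource group name, rule name, prefix, and day counts are all placeholders chosen for illustration; the data source simply assumes that such an account already exists.

```hcl
// Hypothetical existing account; replace the name and resource group with your own.
data "azurerm_storage_account" "example" {
  name                = "examplestorageacct"
  resource_group_name = "example-rg"
}

resource "azurerm_storage_management_policy" "example" {
  storage_account_id = data.azurerm_storage_account.example.id

  rule {
    name    = "ageOutLogs" // placeholder rule name
    enabled = true

    filters {
      // A prefix starts with the container name; "logs" is an assumed container.
      prefix_match = ["logs/"]
      blob_types   = ["blockBlob"]
    }

    actions {
      base_blob {
        // Tiering: Hot -> Cool after 30 days, Cool -> Archive after 90 days.
        tier_to_cool_after_days_since_modification_greater_than    = 30
        tier_to_archive_after_days_since_modification_greater_than = 90
        // Deletion: remove blobs that have not been modified for a year.
        delete_after_days_since_modification_greater_than = 365
      }
    }
  }
}
```

The module built later in this post follows the same shape, but reads the rule values from variables instead of hard-coding them.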

Azure Blob Storage Account Operational Backup

Operational Backup is a feature provided by Azure that automatically protects the blob data in your storage account. It is designed to guard your data against accidental deletion, modification, or corruption. The protection is continuous and fully managed, so you do not schedule individual backup jobs, and your data remains recoverable throughout the retention period.

Key features of Operational Backup include:

- Automatic and incremental backups of blob data

- Point-in-time restore capability, allowing you to restore data to a specific point in time

- Retention of backups for a specified period, based on your backup policy

- Support for block blobs (point-in-time restore does not cover append blobs or page blobs)

In summary, Azure Blob Storage Account Operational Backup is designed to protect your data from accidental deletion or corruption by creating managed backups of your blob data, while Azure Storage Account Management Policy helps you automate data lifecycle management, including the transition between storage tiers and the deletion of expired data using Terraform.

Prerequisites for using Azure Storage Account Management Policy via Terraform azurerm_storage_management_policy resource block

Before diving into the tutorial, ensure you have the following:

- An Azure account with necessary permissions.

- Terraform installed on your local machine (version 1.5.x or newer).

- The Azure CLI installed and authenticated with your Azure account.
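As a starting point, the prerequisites above can be pinned in the configuration itself. This is a minimal sketch: the Terraform version constraint mirrors the requirement stated above, while the `~> 3.0` provider constraint is an assumption chosen to match the argument names used in this post.

```hcl
terraform {
  // Matches the "1.5.x or newer" prerequisite.
  required_version = ">= 1.5.0"

  required_providers {
    azurerm = {
      source = "hashicorp/azurerm"
      // Assumed constraint; adjust it to the provider version you actually use.
      version = "~> 3.0"
    }
  }
}

// With an authenticated Azure CLI session, an empty features block is enough
// for the 3.x provider to pick up your credentials.
provider "azurerm" {
  features {}
}
```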

Understanding Azure Blob Storage and Storage Management Policies

Azure Blob Storage is a service for storing large amounts of unstructured data, such as text or binary data. It is a scalable, cost-effective, and reliable storage solution for various purposes, including backups.

Azure Storage Management Policies provide a way to manage the lifecycle of your Blob Storage data by automating actions such as tiering, archiving, or deleting blobs based on specified rules.

In this tutorial, I will use Terraform to create an Azure Storage Account, configure a Storage Management Policy with custom rules, and apply these rules to the blobs stored in the account.

Creating a Terraform Module for Azure Blob Storage Backup

We'll start by creating a Terraform module that sets up an Azure Storage Account and configures the azurerm_storage_management_policy. The module will use variables to allow customization of the Storage Account properties and backup rules.

First, create the main Terraform configuration file that uses the custom module.

Then, create the following files:

main.tf

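    // add other resources if required

    resource "azurerm_storage_account" "storage_account" {
      name                = var.storage_account_name
      resource_group_name = var.resource_group_name
      location            = var.location
      account_kind        = var.account_kind
      account_tier        = var.account_tier
      access_tier         = var.access_tier
      // account_replication_type is a required argument; "LRS" is an assumed value, adjust as needed
      account_replication_type = "LRS"
      // azurerm 3.x argument name; provider 4.x renames it to https_traffic_only_enabled
      enable_https_traffic_only = true
    }

    // A storage account holds a single management policy, so each entry in
    // var.rules becomes one rule block inside that policy.
    resource "azurerm_storage_management_policy" "storage_management_policy" {
      storage_account_id = azurerm_storage_account.storage_account.id

      dynamic "rule" {
        for_each = var.rules

        content {
          name    = rule.value.name
          enabled = true

          filters {
            prefix_match = rule.value.prefix_match
            blob_types   = ["blockBlob"]
          }

          actions {
            base_blob {
              tier_to_cool_after_days_since_modification_greater_than    = rule.value.base_blob.tier_to_cool_days
              tier_to_archive_after_days_since_modification_greater_than = rule.value.base_blob.tier_to_archive_days
              delete_after_days_since_modification_greater_than          = rule.value.base_blob.delete_after_days_since_modification
            }

            // Emit the snapshot and version actions only when an age is set for the rule.
            dynamic "snapshot" {
              for_each = rule.value.snapshot_age == null ? [] : [rule.value.snapshot_age]

              content {
                delete_after_days_since_creation_greater_than = snapshot.value
              }
            }

            dynamic "version" {
              for_each = rule.value.version_age == null ? [] : [rule.value.version_age]

              content {
                delete_after_days_since_creation = version.value
              }
            }
          }
        }
      }
    }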

With main.tf in place, the module can create a Storage Account and configure a Storage Management Policy with customizable rules once its input variables are declared.
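For illustration, a root configuration might call the module as sketched below. The module path, the module name, and all values are hypothetical, and the sketch assumes the module declares the input variables that main.tf references and that the resource group already exists.

```hcl
module "blob_lifecycle" {
  // Hypothetical location of the module inside your repository.
  source = "./modules/storage-lifecycle"

  // Storage account names must be globally unique, 3 to 24 characters,
  // lowercase letters and numbers only; this one is a placeholder.
  storage_account_name = "stlifecycledemo001"
  resource_group_name  = "rg-lifecycle-demo"
  location             = "East US"
}
```

Any input that is not set here falls back to the default declared in the module's variables.tf.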

Implementing Multiple Rules with for_each

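In some cases, you might need to apply multiple rules to your Storage Management Policy. A storage account can hold only one management policy, so all rules have to live inside that single policy. To generate them from one input, you can use for_each to iterate over a map of rules.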

First, create a variables.tf file inside the module. Alongside the Storage Account settings, it declares a rules variable: a map of rule objects, provided here with default values:

    variable "storage_account_name" {
      type        = string
      description = "The name of the Storage Account."
      default     = "mystorageaccount"
    }

    variable "resource_group_name" {
      type        = string
      description = "The name of the Resource Group where the Storage Account is located."
      default     = "myresourcegroup"
    }

    variable "location" {
      type        = string
      description = "The location where the Storage Account is created."
      default     = "East US"
    }

    variable "account_kind" {
      type        = string
      description = "The kind of the Storage Account."
      default     = "StorageV2"
    }

    variable "account_tier" {
      type        = string
      description = "The tier of the Storage Account."
      default     = "Standard"
    }

    variable "access_tier" {
      type        = string
      description = "The access tier of the Storage Account."
      default     = "Hot"
    }

    variable "rules" {
      type = map(object({
        name         = string
        prefix_match = list(string)
        base_blob = object({
          tier_to_cool_days                    = number
          tier_to_archive_days                 = number
          delete_after_days_since_modification = number
        })
        snapshot_age = number
        version_age  = number
      }))

      default = {
        rule1 = {
          name         = "sample_rule1"
          prefix_match = []
          base_blob = {
            tier_to_cool_days                    = 30
            tier_to_archive_days                 = 180
            delete_after_days_since_modification = 365
          }
          snapshot_age = 60
          version_age  = 90
        },
        rule2 = {
          name         = "sample_rule2"
          prefix_match = ["archive/"]
          base_blob = {
            tier_to_cool_days                    = null
            tier_to_archive_days                 = 60
            delete_after_days_since_modification = null
          }
          snapshot_age = null
          version_age  = null
        }
      }
    }

With these changes, the module now supports multiple rules with default values, and you can add more rules by simply updating the rules variable.
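As a sketch of what updating the rules variable could look like, the terraform.tfvars fragment below supplies a custom map. The rule keys, names, prefixes, and day counts are all hypothetical. Note that setting rules replaces the default map entirely rather than merging with it, so every rule you want must be listed.

```hcl
// terraform.tfvars (hypothetical values)
rules = {
  logs = {
    name         = "expireLogs"
    prefix_match = ["logs/"] // assumes a container named "logs"
    base_blob = {
      tier_to_cool_days                    = 30
      tier_to_archive_days                 = null
      delete_after_days_since_modification = 90
    }
    snapshot_age = 30
    version_age  = 30
  }

  reports = {
    name         = "archiveReports"
    prefix_match = ["reports/"] // assumes a container named "reports"
    base_blob = {
      tier_to_cool_days                    = null
      tier_to_archive_days                 = 60
      delete_after_days_since_modification = null
    }
    snapshot_age = null
    version_age  = null
  }
}
```

A .tfvars file only sets variables of the root configuration. If the code lives in a child module, pass the same map as the rules argument of the module block instead.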

Outputs

    // add the following outputs if required

    output "storage_account" {
      value       = azurerm_storage_account.storage_account
      description = "The created Storage Account resource."
      // the resource object includes the access keys, so the whole output must be marked sensitive
      sensitive = true
    }

    output "storage_management_policy" {
      value       = azurerm_storage_management_policy.storage_management_policy
      description = "The created Storage Management Policy."
    }

    output "storage_account_primary_access_key" {
      value       = azurerm_storage_account.storage_account.primary_access_key
      description = "The primary access key for the Storage Account."
      sensitive   = true
    }

    output "storage_account_secondary_access_key" {
      value       = azurerm_storage_account.storage_account.secondary_access_key
      description = "The secondary access key for the Storage Account."
      sensitive   = true
    }

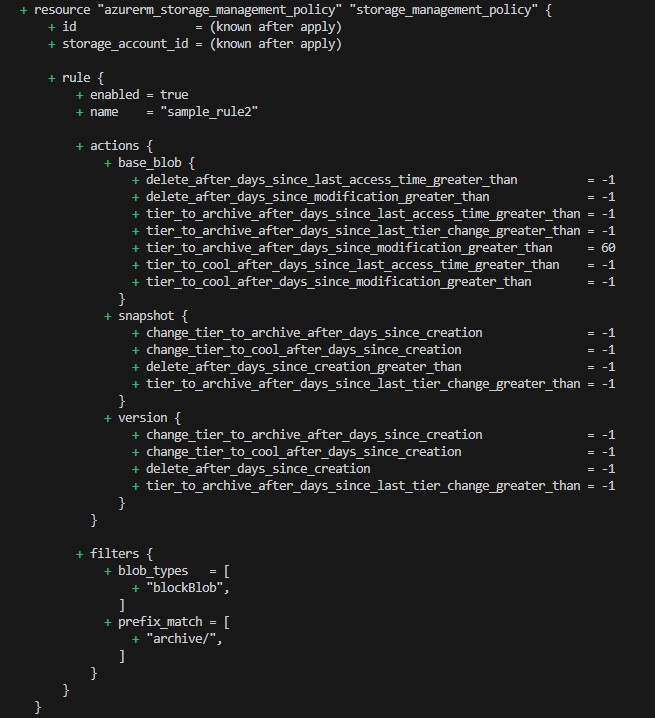

After applying the Terraform configuration, our account will be created with the rules below:

azurerm_storage_management_policy Rule 1

azurerm_storage_management_policy Rule 2

Conclusion

In this blog article, I have demonstrated how to leverage data lifecycle management for Azure Blob Storage containers using the azurerm_storage_management_policy Terraform resource. By creating a custom Terraform module, I've enabled the automation and customization of Azure Blob Storage backup policies, offering flexibility to adapt to different requirements.

With the ability to implement multiple rules and iterate over them using the for_each meta-argument, you can now create a comprehensive and efficient backup strategy for your Azure Blob Storage data.

With the ever-growing importance of data security and reliability, leveraging Terraform and Azure Storage Management Policies is a valuable addition to your toolbox for managing and protecting your data in the Azure cloud.

Useful Links

Here’s a list of helpful resources related to the concepts and tools discussed in the article. These links provide additional context, documentation, and guides to deepen your understanding of Azure Blob Storage lifecycle management and Terraform:

Azure Documentation

- Azure Blob Storage Overview: Learn about Azure Blob Storage, its features, and use cases.

- Azure Storage Account Management Policies: Understand how to configure lifecycle management policies for Azure Storage Accounts.

- Azure Blob Storage Operational Backup: Explore Azure Blob Storage Operational Backup features for point-in-time recovery.